High Bandwidth Memory (HBM) has reshaped memory performance for data-intensive applications. It offers much higher bandwidth, lower power consumption, and a smaller form factor than conventional memory technologies, which have struggled to keep pace with the rising demands of modern computing. What most distinguishes HBM from other memory technologies, however, is its packaging. HBM packaging departs sharply from standard techniques, and that divergence has enabled new designs and applications in fields including artificial intelligence, machine learning, and high-performance computing (HPC).

What is HBM (High Bandwidth Memory)?

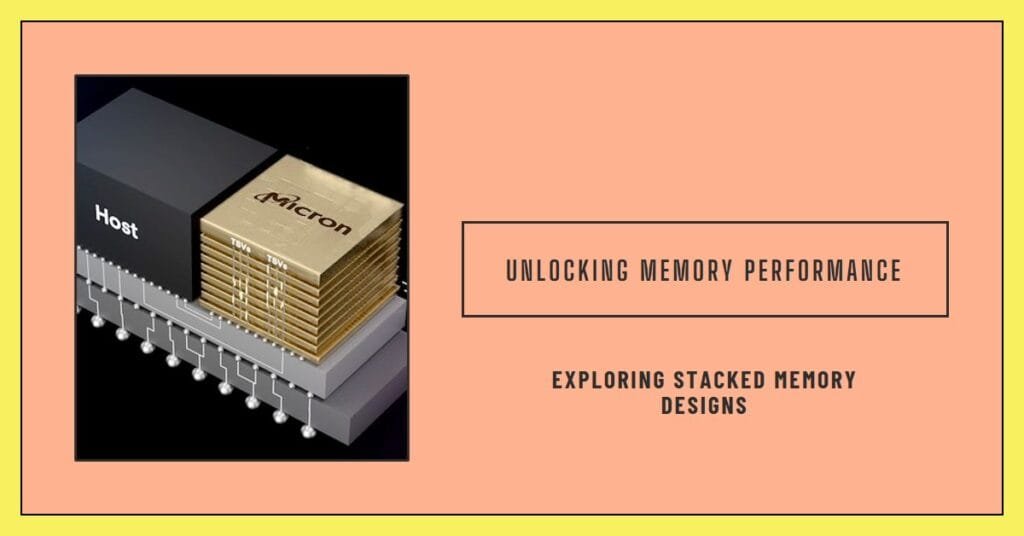

High Bandwidth Memory (HBM) is a form of DRAM (Dynamic Random-Access Memory) that offers very high data transfer rates and bandwidth. Unlike conventional memory such as DDR4 and GDDR, HBM stacks its memory dies vertically, which allows higher speed and efficiency. HBM is especially suited to applications that must process large volumes of data quickly, such as GPUs, artificial intelligence accelerators, and supercomputers.

Why Does HBM Packaging Matter?

HBM’s packaging technology matters because it directly affects the memory’s performance, power consumption, and size. Traditional memory modules sit apart from the processor and are connected to it by long traces on the motherboard, which add delay and waste power. HBM packaging instead uses 2.5D and 3D packaging technologies to place the memory stacks right next to the processor. This shortens the distance between components, lowers power consumption, and raises bandwidth.

Conventional Memory Packaging vs. HBM Packaging

Traditional memory technologies such as DDR4 and GDDR5 are packaged in BGA (Ball Grid Array) packages, or in TSOP (Thin Small Outline Package) for older DRAM generations, and are mounted on the board as components separate from the CPU or GPU. This approach limits speed, power efficiency, and form factor.

HBM packaging, by contrast, uses 3D stacking: memory dies are stacked atop one another and connected by Through-Silicon Vias (TSVs). This makes higher bandwidth and much shorter communication distances possible.

The Evolution of HBM Packaging Technologies

HBM packaging evolved in response to demand for denser, faster, more power-efficient memory. As computing demands grew, the gap between CPU/GPU performance and memory bandwidth widened. New packaging methods such as HBM emerged when conventional memory packaging could no longer close that gap.

Each generation of HBM (HBM1, HBM2, HBM2E, and the more recent HBM3) has brought notable gains in bandwidth, power efficiency, and density.

HBM’s 2.5D Packaging: A Key Innovation

The use of 2.5D packaging is a fundamental HBM packaging breakthrough. Unlike conventional 2D packaging, in which components sit side by side on a single plane and connect through the package substrate or board, 2.5D packaging places the HBM stacks and the processor together on a silicon interposer. The interposer connects the components through dense, high-speed wiring, which makes faster data transfer and lower power consumption possible.

The memory and processor in a 2.5D system sit far closer together than in conventional systems, which lowers latency and raises bandwidth.

TSV (Through-Silicon Via): The Backbone of HBM Packaging

Through-Silicon Vias (TSVs) form the core of HBM packaging technologies. A TSV is a vertical electrical connection that passes through a silicon die and enables direct communication between stacked memory layers. HBM’s low power usage and high bandwidth depend on this technique.

By allowing multiple memory dies to be stacked on top of one another, TSVs make 3D stacking possible, which increases memory density without requiring extra board area.
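The density gain from stacking can be sketched with simple arithmetic: the capacity of one stack is the number of dies multiplied by the capacity of each die, while the footprint stays that of a single die. The snippet below is a minimal illustration only. The function name `stack_capacity_gb`, the 16 Gbit die density, and the stack heights are assumptions chosen for the example, not figures from this article.

```python
def stack_capacity_gb(dies_per_stack: int, die_density_gbit: int) -> float:
    """Capacity of one memory stack in gigabytes.

    Each die contributes die_density_gbit gigabits; 8 gigabits make 1 gigabyte.
    """
    return dies_per_stack * die_density_gbit / 8


# Illustrative assumption: every DRAM die in the stack holds 16 Gbit.
ASSUMED_DIE_DENSITY_GBIT = 16

for height in (4, 8, 12):
    capacity = stack_capacity_gb(height, ASSUMED_DIE_DENSITY_GBIT)
    print(f"{height}-high stack: {capacity:.0f} GB in the footprint of one die")
```

Under these assumed numbers, doubling the stack height doubles the capacity while the occupied area stays the same, which is the point the paragraph above makes.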

Interposers in HBM Packaging

The silicon interposer is another vital part of HBM packaging. It is a large piece of silicon that sits beneath both the processor and the HBM stacks. It carries thousands of tiny electrical connections that let the memory and the processor communicate quickly.

Using a silicon interposer in 2.5D packaging reduces signal loss and can help with thermal management, which is particularly important for high-performance computing applications that generate considerable heat.

HBM2 and HBM3: Pushing the Boundaries of Bandwidth

HBM2 and HBM3, the more recent versions of the HBM standard, offer more bandwidth and better power efficiency than their predecessors. HBM2 supports data rates of up to 2 Gbps per pin, while HBM3 pushes this limit higher, to as much as 6.4 Gbps per pin.
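To see what these per-pin rates mean for a whole stack, multiply the rate by the width of the interface. The sketch below assumes the 1024-bit-wide interface per stack defined by the JEDEC HBM standards, a figure this article does not state; the function name `stack_bandwidth_gbs` is purely illustrative.

```python
# Assumption: each HBM stack exposes a 1024-bit data interface (per the JEDEC HBM standards).
HBM_INTERFACE_BITS = 1024


def stack_bandwidth_gbs(pin_rate_gbps: float, width_bits: int = HBM_INTERFACE_BITS) -> float:
    """Peak bandwidth of one stack in GB/s: per-pin rate times pin count, divided by 8 bits per byte."""
    return pin_rate_gbps * width_bits / 8


# Per-pin data rates quoted in the text above.
for name, pin_rate in (("HBM2", 2.0), ("HBM3", 6.4)):
    print(f"{name}: {stack_bandwidth_gbs(pin_rate):.1f} GB/s per stack")
```

These are theoretical peak figures for a single stack; a processor paired with several stacks multiplies the total accordingly, and sustained bandwidth in practice is lower than the peak.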

These improvements make HBM2 and HBM3 well suited to artificial intelligence, machine learning, and high-performance computing, where vast volumes of data must be processed quickly.

Challenges in HBM Packaging

Despite its benefits, HBM packaging poses several challenges:

- Cost: Because of the complexity of 2.5D and 3D stacking and the use of TSVs and interposers, HBM packaging is more expensive than conventional packaging techniques.

- Thermal Management: Stacking memory dies vertically raises the risk of thermal hotspots, which can compromise reliability and performance.

- Manufacturing Complexity: The precision needed to align TSVs and bond several memory layers makes the manufacturing process considerably more complex.

Advantages of HBM Packaging

HBM packaging has clear advantages:

- Higher Bandwidth: HBM provides much more bandwidth than conventional memory technologies, which makes it ideal for data-intensive uses.

- Lower Power Consumption: HBM uses less power than standard memory because it shortens the distance data must travel between the memory and the processor.

- Compact Form Factor: 3D stacking of memory dies provides more memory density without enlarging the physical footprint, which suits space-constrained packages such as GPUs and AI accelerators.

Applications of HBM Packaging in Various Industries

A variety of sectors use HBM packaging technology:

- Artificial Intelligence (AI) and Machine Learning: HBM’s high bandwidth makes it well suited to training artificial intelligence models and running machine learning algorithms.

- Graphics Processing Units (GPUs): High-performance GPUs often feature HBM because it supplies the memory bandwidth needed for real-time rendering of complex graphics.

- High-Performance Computing (HPC): Supercomputers use HBM to handle vast volumes of data quickly and efficiently.

How HBM Packaging Will Shape the Future of Memory Technologies

Standard memory technology will struggle to keep up as processing demands keep rising. HBM packaging points to where memory technology is heading: scalable, high-performance solutions that can meet the needs of next-generation applications in artificial intelligence, HPC, and other emerging fields of computing.

The Impact of HBM on AI and Machine Learning

HBM’s departure from conventional packaging matters especially for artificial intelligence and machine learning. As models grow in complexity, they need more memory and more bandwidth to handle data in real time. HBM packaging enables faster training and more efficient inference, which supports autonomous systems, natural language processing, and other AI-driven technologies.

HBM Packaging and Energy Efficiency

Energy efficiency is one of the main advantages of HBM packaging. By keeping the distance between the memory and the processor short, HBM lowers the energy needed to move data. This makes HBM an attractive choice for data centers and HPC systems, where energy consumption is a major concern.
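A rough way to reason about this is to multiply the amount of data moved per second by the energy spent per bit. The sketch below shows only the arithmetic. The two energy-per-bit values are hypothetical placeholders chosen for illustration, not measurements of any real memory, and the function name `data_movement_power_w` is likewise an assumption.

```python
def data_movement_power_w(bandwidth_gbs: float, energy_pj_per_bit: float) -> float:
    """Power in watts spent moving data at a given bandwidth and energy cost per bit."""
    bits_per_second = bandwidth_gbs * 1e9 * 8  # GB/s -> bits/s
    return bits_per_second * energy_pj_per_bit * 1e-12  # pJ -> J


# Hypothetical placeholder values, NOT measured figures:
ASSUMED_ON_PACKAGE_PJ_PER_BIT = 4.0   # short links across an interposer
ASSUMED_OFF_PACKAGE_PJ_PER_BIT = 15.0  # long traces across a board

BANDWIDTH_GBS = 256.0  # same traffic in both cases

for label, pj in (("on-package", ASSUMED_ON_PACKAGE_PJ_PER_BIT),
                  ("off-package", ASSUMED_OFF_PACKAGE_PJ_PER_BIT)):
    print(f"{label}: {data_movement_power_w(BANDWIDTH_GBS, pj):.2f} W at {BANDWIDTH_GBS:.0f} GB/s")
```

Whatever the real per-bit figures are for a given system, the relationship is linear: at a fixed bandwidth, any reduction in energy per bit translates directly into the same proportional reduction in data-movement power.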

Conclusion: The Future of HBM Packaging

HBM packaging technology is a major departure from conventional memory packaging techniques. By combining 2.5D and 3D stacking, TSVs, and silicon interposers, HBM delivers levels of bandwidth, efficiency, and compactness that conventional memory cannot match. Thermal management and cost remain challenges, but for bandwidth-hungry workloads the benefits outweigh the disadvantages.